Writing in the Age of AI: Errors, Eccentricity, and Ego

In which I engage in an extended and somewhat clunky Star Wars metaphor, and eventually conclude that clunkiness is sort of the point.

I.

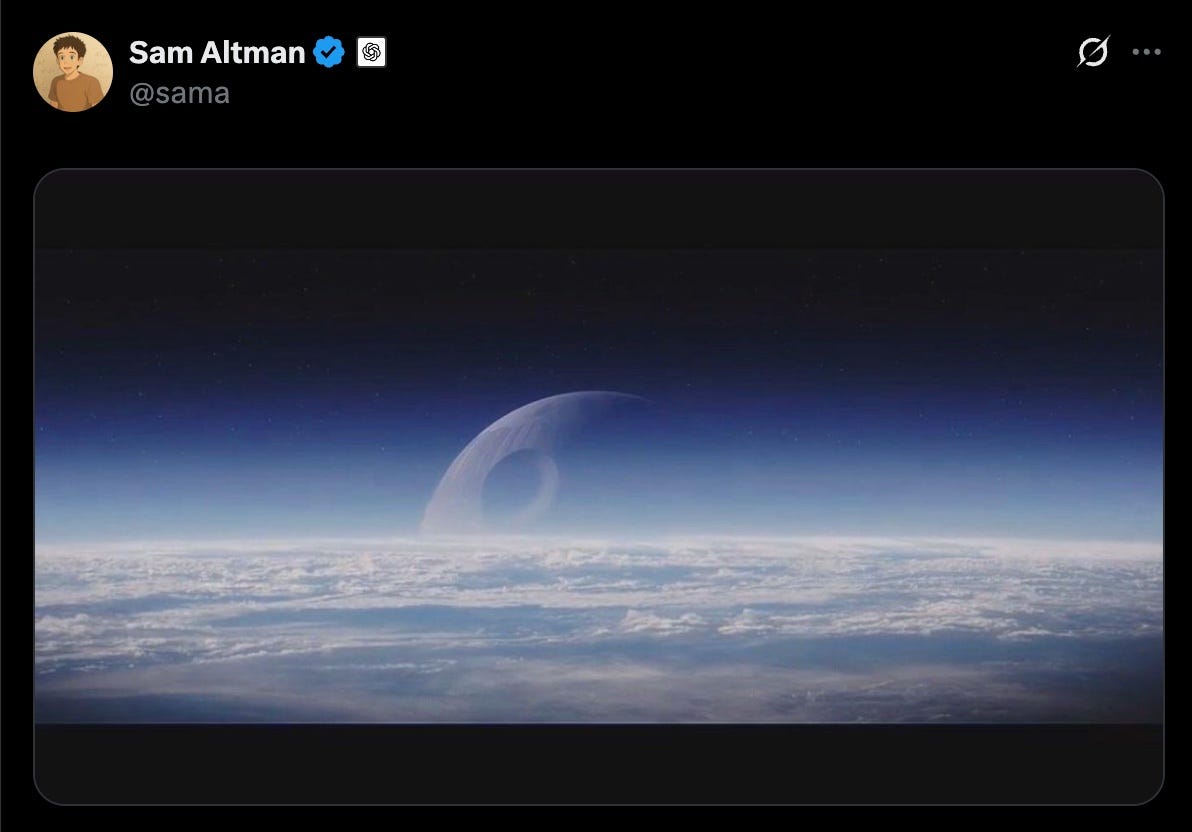

You may have seen or heard about Sam Altman’s announcement of ChatGPT-5:

It’s an image of the Death Star rising over the horizon of Scarif, from the movie Rogue One. For those of us trying to carve out a niche on the internet through writing, the image of AI as the Death Star was very apt. It hangs over our world with the promise of eventual destruction. We’re living under the capricious, iron fist of the Empire now, and we need to figure out how to deal with that.[1]

Obviously, living under the rule of this new evil (or at least all-encompassing) empire brings with it a host of deep philosophical questions. But there are also a significant number of quotidian concerns. With respect to AI, I’ve already come up with answers to questions like:

Should I use it to polish my prose? No. My prose is my own, and the bumps and imperfections it contains are part of the charm. (A point I’ll expand on.)

Should I use it to read my posts and create an audio version? Only for my book review posts, not the rest. The ambitious part of me feels I should actually read everything, to put just the right emphasis on it. The busy/lazy part of me would like to use the AI voice with everything. This is the compromise I’ve arrived at.

Should I use it for research? Definitely. I think this may be the thing AI is best at, though one should still independently confirm anything going into a post. Also, I already see myself wasting too much time on minutiae because queries are so easy.

Beyond this, I’ve also discovered that AI saves me time when I’m reading and reviewing books. To give one example, I can upload a copy of the book and use AI to find passages that I only hazily remember: the kind of thing where a normal search brings back too many results, or none at all because I’m sure the word “contract” was in there when it’s actually “agreement”. (Does this happen to anyone else?) This allows me to be far less scrupulous about my note-taking.[2] AI works as a very sophisticated command-F function. That said, you should always take any excerpt AI gives you and double-check that it actually appears in the book. Things have gotten better, but it wasn’t all that long ago that I caught ChatGPT making up passages from the Aeneid.[3]

I also use it to check my reviews. For example, after coming up with my summary of a book, I’ll ask the AI to critique it. Generally it will tell me that I missed some nuance and offer to rewrite the summary for me. I always turn down the rewrite, but I’ll consider incorporating things it claims I missed. I draw the line at using actual AI copy in my posts, though occasionally it will come up with a term and I’ll steal that. As an example, it used the label “data-driven contrarian” for Steve Sailer. I thought that was a good way of describing him, so I incorporated it into my recent review of his book.

So that’s how I live day to day under the threat of annihilation, but what about the larger questions I alluded to earlier? When you’ve got a huge writer-destroying battlestation hanging on the horizon, a surprising number of people will ignore it and just go about their daily lives. But some people will try to fight back. Some will join the rebellion. What does that look like?

II.

The Death Star was terrifying because it could blow up planets. AI isn’t going to do that (at least LLMs aren’t), but it does threaten to bury us in slop. Instead of a giant beam of destructive energy, imagine an endless stream of competent but vaguely vanilla blog posts, pouring out of a giant fire hose and gradually submerging the entire landscape.

Arguably in some areas this has already happened. (I think the shorter the form, the worse the ensloppification.) So far Substack appears to be holding out, at least my corner of it. It might even be said to be the Yavin IV of our rebellion. (If we’re going to continue with Star Wars metaphors.) It helps that in order to earn money you have to have real humans actually choosing to subscribe. But actual humans are dumb, and I’m sure there are people who have already paid to subscribe to newsletters written mostly by AI. Every rebellion has its double agents, and every empire its useful idiots.

If we’re going to be part of the rebellion, it’s not enough to avoid the slop; we have to fight it. Perhaps the concessions I’ve already made undermine my commitment? Or perhaps this whole rebellion metaphor is at the end of its usefulness? My big point is that we’re fast approaching a moment, if we haven’t arrived already, when people using AI will be able to trivially produce a thousand times as much content as a “traditional” writer. Or even a writer who uses AI selectively like I do. Given this, what can we do? How does one stand out? Or should we all just give up now?

Let’s assume that you’re not going to give up. I’m not, though the thought has crossed my mind. (The thought of ceasing to write, not of turning it all over to LLMs.) Then the next question is: what does it mean to stand out? Does that entail having thousands of subscribers? Does it mean rising above the AI slop in some fashion? And how much does it depend on people reading our stuff and saying, “Well, that definitely wasn’t written by an AI”?

It seems like that last point is more important than I would like it to be. Or maybe it’s more concrete. Certainly people are already interested in trying to ferret out the AI. Notice the recent claim that the em dash is proof positive your piece was written by AI. Obviously this is not the case. (Mary Shelley used the em dash gratuitously.) But it’s evidence we’re in a moment when people will latch onto anything.

What is one to do? I have some ideas for how I’m going to handle it, and I’m putting them down both to plant a flag and in the hope that other people might benefit.

III.

For those who have watched Star Wars (maybe the metaphor has some life left after all), you might notice that the people participating in the Rebellion are individualized, while the Empire is largely represented by identically outfitted stormtroopers. Also, the members of the Rebellion are not just individuals; they’re eccentric, bordering on crazy.[4] I think there’s a lesson here. I know that AI is pretty good at mimicry (heck, I have used it to generate a very convincing clone of my voice), but in order to mimic a voice you have to have a voice. And those entering the space just hoping to up their follower count will have no voice for the AI to mimic. Beyond that, I think they’ll eschew eccentricity regardless, opting instead for something approaching the lowest common denominator.[5]

The eccentricity standard is not foolproof. AIs can certainly mimic it to some extent. I’m sure there’s someone out there who’s already issuing prompts that start with “In the voice of Hunter S. Thompson”. But as I already said, I suspect there will be a low return on such efforts, and moreover that attempts to be weird will be easier to pick out than attempts to be universally appealing. Nevertheless, things are changing pretty quickly, and we are probably still many years out from truly knowing what changes AI will bring to society. I merely contend that eccentricity is probably the best option out of a batch that are all less than ideal.

I don’t think I have an especially eccentric voice, but there are eccentric elements in my writing I’m going to lean into more. You may have already noticed the copious footnotes in this post. I suspect, though I cannot prove, that AI is still not great at tucking personal stories or clever asides into footnotes. Heretofore I have restrained the urge to add one whenever a thought strikes me. Going forward I’m going to unleash it. (Or at least take it out for more frequent walks. Also, it’s apparently not just footnotes, but parentheticals as well.)

Beyond eccentricity, there is also fallibility. AIs don’t make mistakes. Or at least they make very few mistakes in the realm of prose style. They can make some truly colossal mistakes when it comes to hallucinations, but such hallucinations will be tightly written with excellent word choice. AIs are polished but sometimes wrong. The chief way to set yourself apart is to always be right and, failing that, to be at least a little bit rough. For me this means I’m going to be less intent on smoothing out every last rough edge of my writing. (Not that I was fantastic at it, but I tried, perhaps too much.) If my first instinct is to say something long and somewhat convoluted, I may just leave it that way.

Even if my voice is only moderately eccentric, and even if one can take “roughness” only so far before it bleeds into the torturous, there is still an enormous space open in the realm of eccentric ideas. Anyone attempting to game the system with AI is going to go for really popular ideas. None of my ideas are particularly popular. And it’s in this area that I hope I’ve already done much to distinguish myself. While everyone else was writing about good guys and bad guys in the Israel-Hamas-Gaza mess, I was writing about legibility. My book reviews frequently involve picking out a single item and then mercilessly dissecting it. And my book (which is still coming; summer was busy) is a screed in opposition to an obscure discipline that most people have never heard of, and certainly aren’t searching for objections to.

Above and beyond all of the foregoing, one hopes that actual writing might contain something ineffable and spiritual: a motivating spark, massive unexplored depths, a sense of the transcendent. Not always; such things are necessarily rare. But occasionally. On some level that’s why I continue to write. And I suspect that’s why you continue to read (not just my stuff, but all the stuff). As the greatest writer of all time said:

There are more things in heaven and earth, Horatio, than are dreamt of in your philosophy.

As it turns out, AIs dream (or hallucinate) far more than we expected. But there are things beyond those dreams, beyond their philosophy. That’s where I hope to set up camp. I hope you’ll join me.

Of course, I have already set up an actual camp, here on wearenotsaved.com. It’s like a vacation home. You know how people would like to spend all of their time there, but in the end they manage only a few rushed weekends each year? That’s where I’m at. But in something of an irony, now that the actual vacation season is over, I hope to be spending more time here, writing somewhat clunky stuff that was definitely not composed by an AI.

[1] Altman later went to great pains to clarify that OpenAI is the Rebellion, but no one bought it for even a second.

[2] Particularly since I’ve noticed that no matter how scrupulous I am about taking notes, when it comes time to review the book, the general point I’m trying to make is often not represented very well by my specific notes. Explaining the whole thing takes different quotes than the ones that attract me when I’m in the middle of reading.

[3] Lots of ink has been spilled on the limitations of AI, a subject I find endlessly fascinating but a significant tangent to my current point. But there are a couple of anecdotes worth footnoting. First, the passages it made up for the Aeneid were quite impressive; it’s great at mimicking style. Second, even ChatGPT-5 seems powerless in the face of short story collections. Maybe it has a hard time distinguishing multiple styles and plots within the same book? But even when I asked it to focus on just one of the stories, it still went off the rails and made up a completely different story!

[4] I cannot recommend Andor strongly enough, both as an illustration of the eccentricity I’m talking about and as just really great TV. It’s possibly the best Star Wars product since Empire.

[5] It’s possible that someone who has worked hard to establish a style and a voice will have so much content that they’ll be able to switch to AI and no one will notice. I’m looking at you, Matt Yglesias.

"Are you expecting that [writing with LLMs] eventually will be much easier?"

It's weird, because it seems like it should be easier now. I'll discuss content with the model at length. In conversation it communicates in lucid, spare prose and gives the impression of understanding. It stays on message when I ask it to prepare an outline, but then when I say, "Okay, go ahead and compose the first draft of section one," it flips from critical thinker to creative writer and suddenly gets much less smart. It sacrifices clarity for flourish and offers poetic metaphors that don't map cleanly onto the subject but which probably sound like "good writing" to someone with no training or experience in constructing or evaluating rational arguments.

GPT-5 is not as bad in this respect as GPT-4o, but it's still a struggle. And it's still quite frustrating.

You mentioned that LLMs don't make the same sorts of compositional errors that humans do, but you'll sometimes find them in Immutable Mobiles posts. Not because the model inserts them in an effort to give the impression of human authorship but because I get tired of the editorial back and forth and just re-write parts of the post myself without telling the model. That's where the errors creep in.

This was a funny piece, I enjoyed it.

However, keep in mind that AIs/LLMs are a moving target. Today they might have tight, polished prose; after an update they might be meandering and eccentric.

I'd say the real trick will be to write authentically about what you care about and not worry about standing out from the muck, but I recognize some writers would actually like to make some spare change from the craft. Good luck to you.